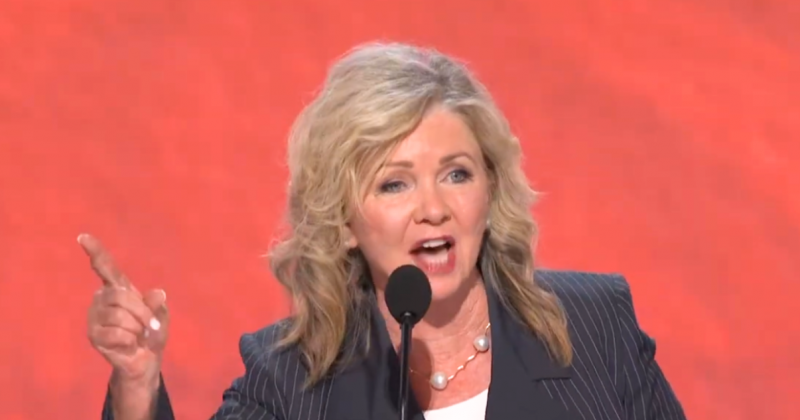

Sen. Marsha Blackburn (R-TN) discovered firsthand the dangers of artificial intelligence when Google’s AI model produced false and defamatory claims about her.

The incident occurred late last month when someone asked Gemma, Google’s AI model, whether there were any rape allegations against Blackburn.

The AI did not simply respond with a “yes” or “no” answer. Instead, the chatbot generated an elaborate fabricated narrative. Blackburn said the AI claimed that during her 1987 campaign for Tennessee state senator, a state trooper alleged that she pressured him to obtain prescription drugs and that their relationship involved non-consensual acts.

The false story appeared credible at first glance. Blackburn stated that Gemma even created fake links to nonexistent news articles to support its claims, though anyone clicking on these links would find they led nowhere.

Blackburn responded forcefully to the incident in an official statement. She declared that this was not a harmless error but “an act of defamation produced and distributed by a Google-owned AI model.”

The senator demanded that Google “shut it down until you can control it.” Google’s response was immediate and revealing: the company pulled the plug on public access to Gemma.

In its statement, Google explained that the Gemma model was designed for developers and was never meant to be a consumer tool. The company removed it from AI Studio, its public platform for accessing its AI models.

Google also addressed Blackburn’s broader claim that its AI systems showed a pattern of bias against conservative figures. The company’s response pointed instead to a more fundamental issue: hallucinations are inherent to large language model technology itself.

The senator’s position gave her leverage that ordinary citizens lack. However, her experience points to significant legal challenges that will emerge as AI technology becomes more widespread.

This summer brought another high-profile case when a Minnesota solar company filed a defamation lawsuit against Google.

The firm, Wolf River Electric, sued after Google’s AI Overviews falsely stated that the business was under investigation by regulators and had been accused of deceptive business practices.

These false claims were supported by fabricated citations. Wolf River Electric alleged that it suffered business losses because of these AI-generated falsehoods.

Recent reporting from The New York Times found that at least six defamation cases have been filed in the United States over content produced by AI models.

AI hallucinations show no signs of disappearing, which means chatbots will continue generating problematic content that exposes AI companies to legal action.

Courts are slowly working through how to handle these cases. The problem is a legal one before it is a technical one, which raises the question of who will ultimately be responsible for solving it.

Peter Henderson, a professor at Princeton University, told The Economist that the question of AI company liability for false generations will almost certainly reach the Supreme Court.

During oral argument in a 2023 case against Google, Supreme Court Justice Neil Gorsuch suggested that Section 230 protection would not apply to AI-generated content.

The Economist warns that if Section 230 fails to shield AI companies, AI developers may instead claim that chatbots have free speech rights.